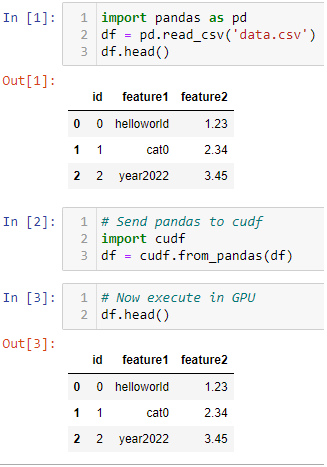

Gilberto Titericz Jr on Twitter: "Want to speedup Pandas DataFrame operations? Let me share one of my Kaggle tricks for fast experimentation. Just convert it to cudf and execute it in GPU

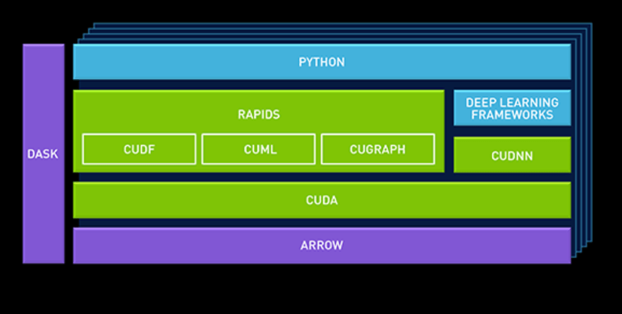

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

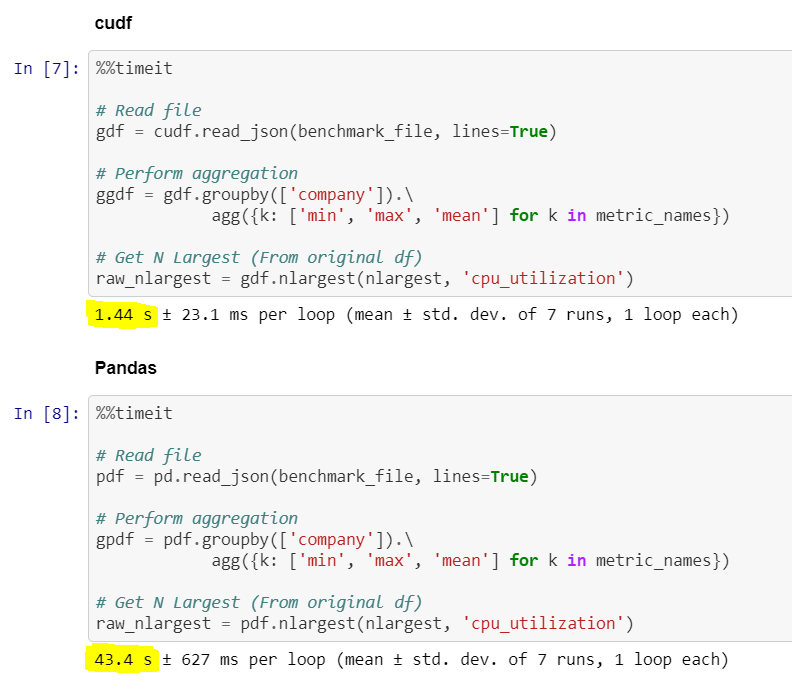

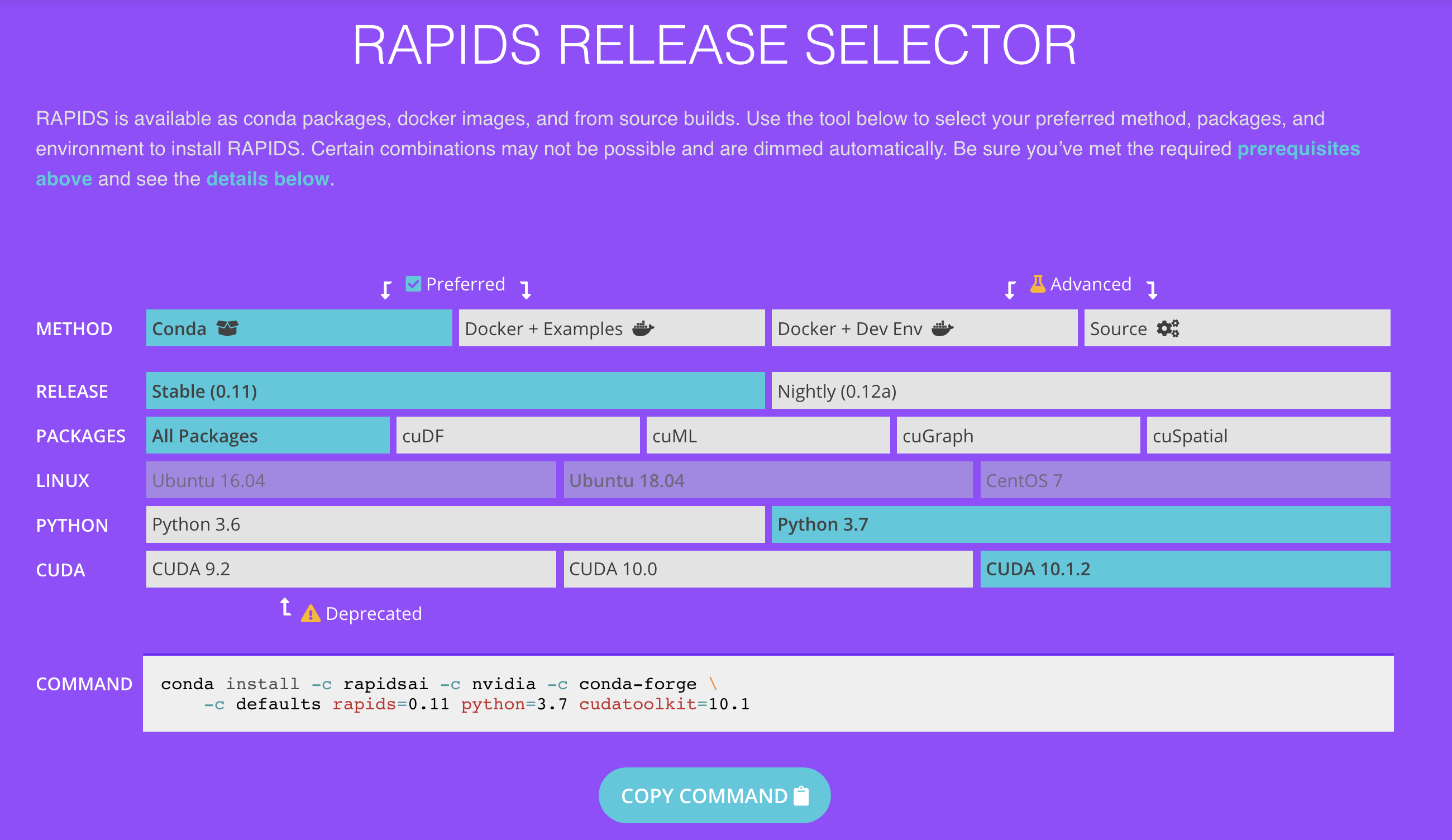

Pandas DataFrame Tutorial - Beginner's Guide to GPU Accelerated DataFrames in Python | NVIDIA Technical Blog

Python Pandas Tutorial – Beginner's Guide to GPU Accelerated DataFrames for Pandas Users | NVIDIA Technical Blog

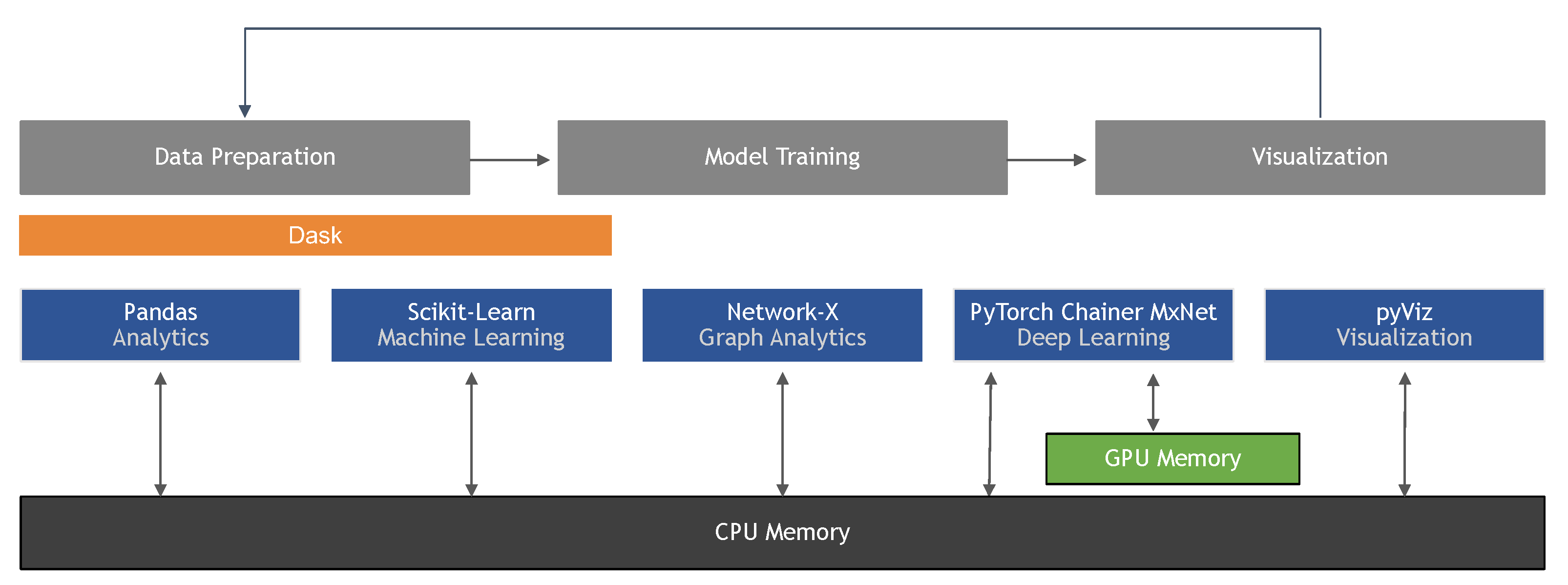

Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence | HTML
